Research on nighttime intelligent monitoring method for deep-sea cage fish school based on water surface infrared images


Abstract (Chinese version):

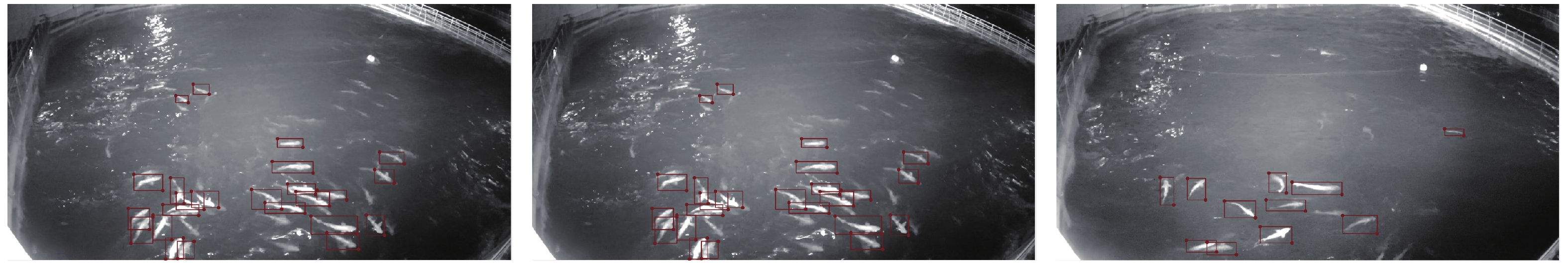

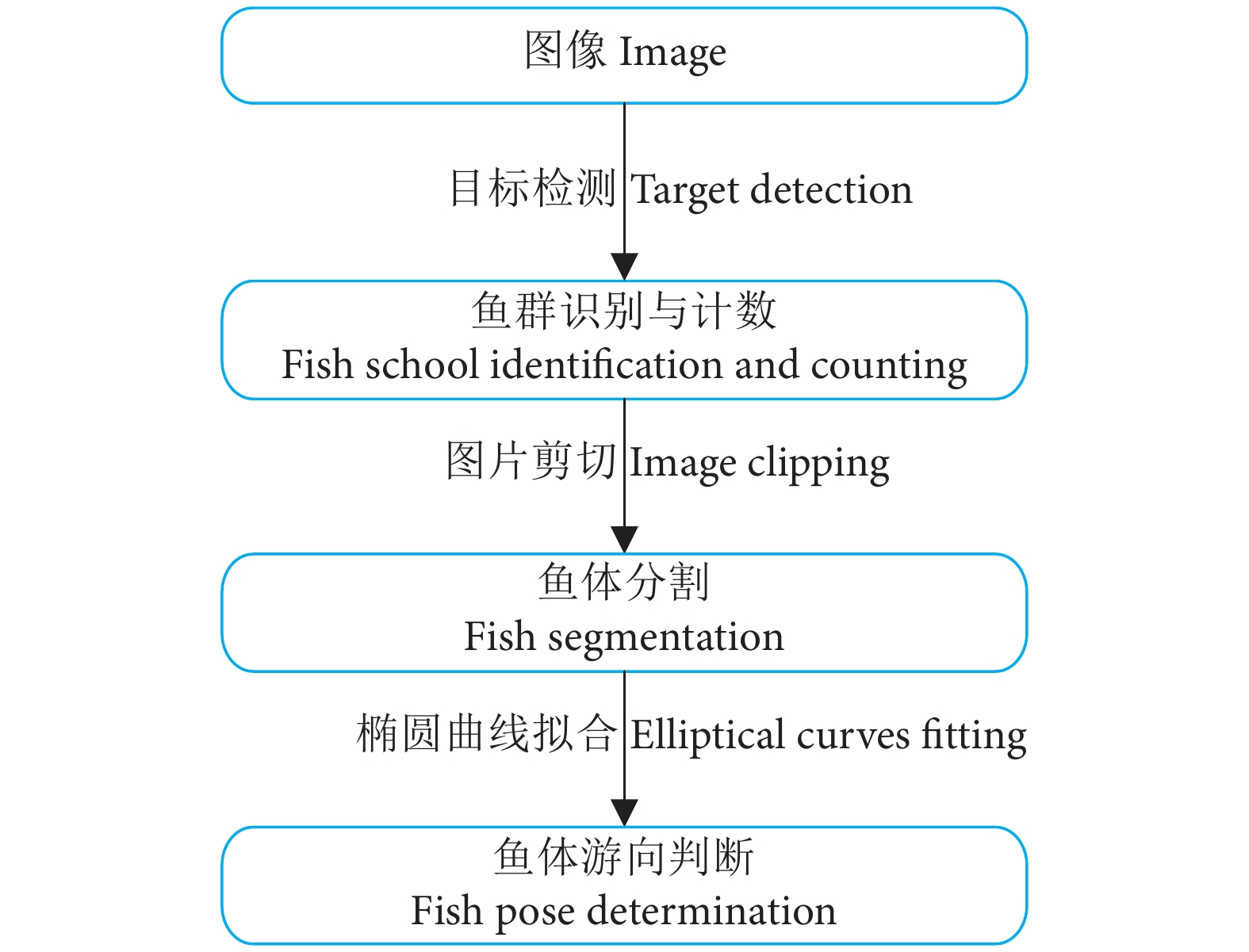

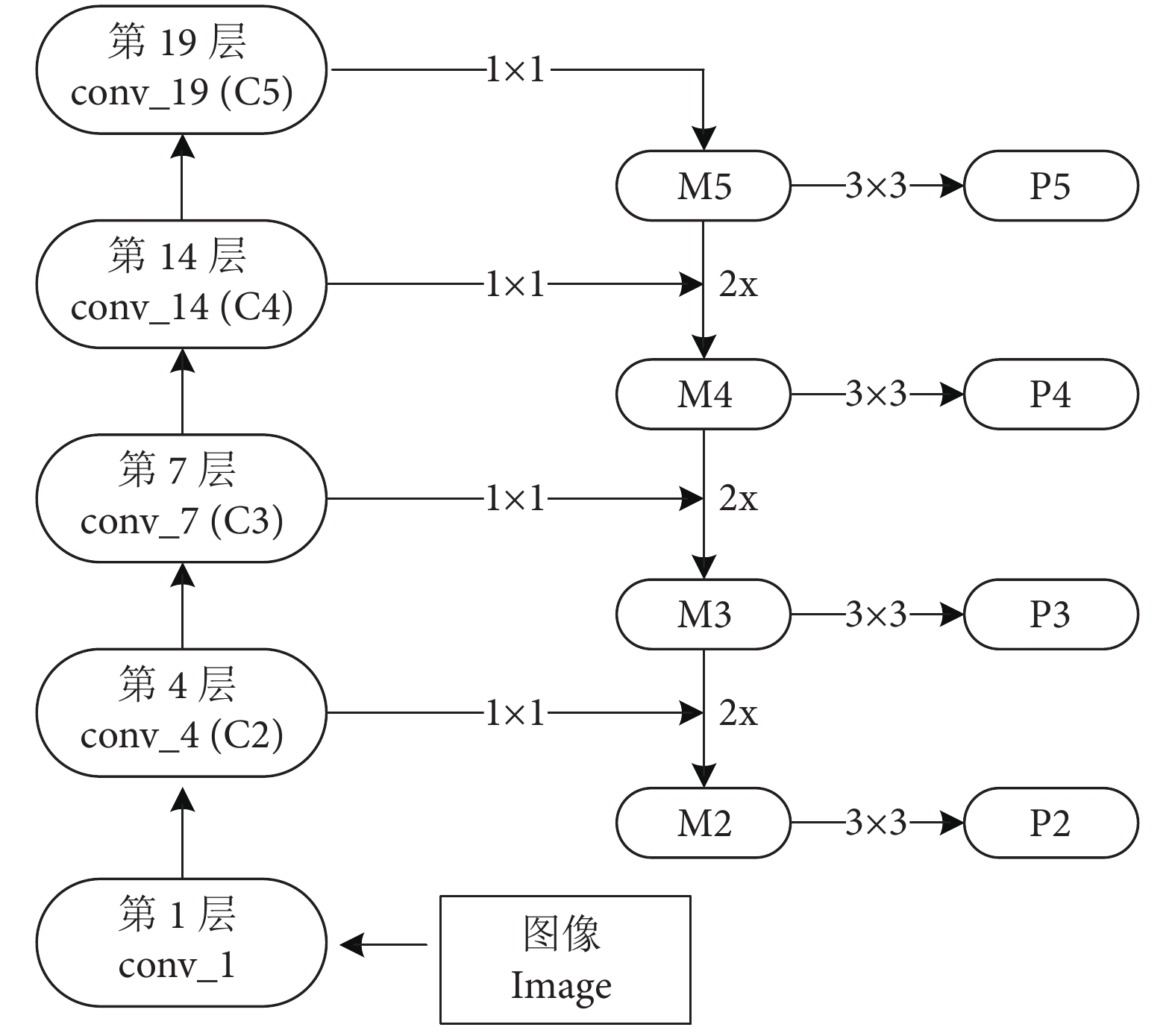

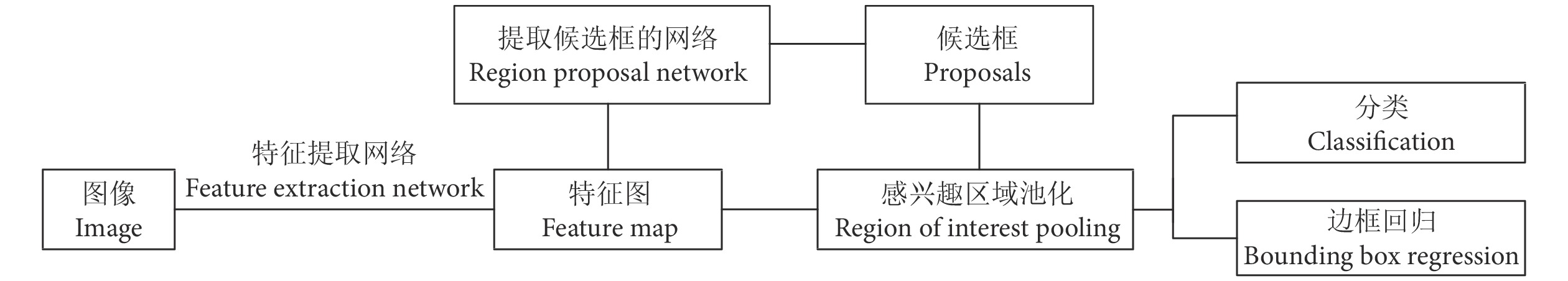

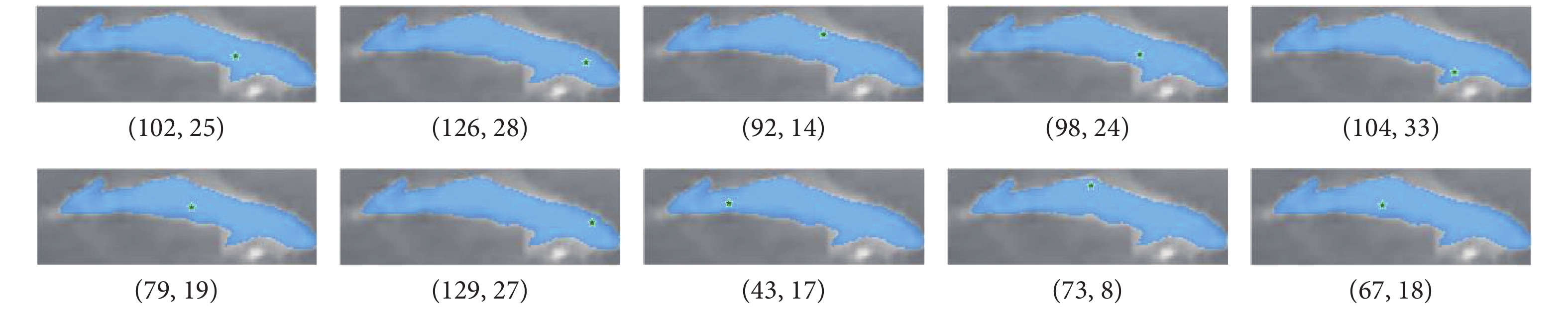

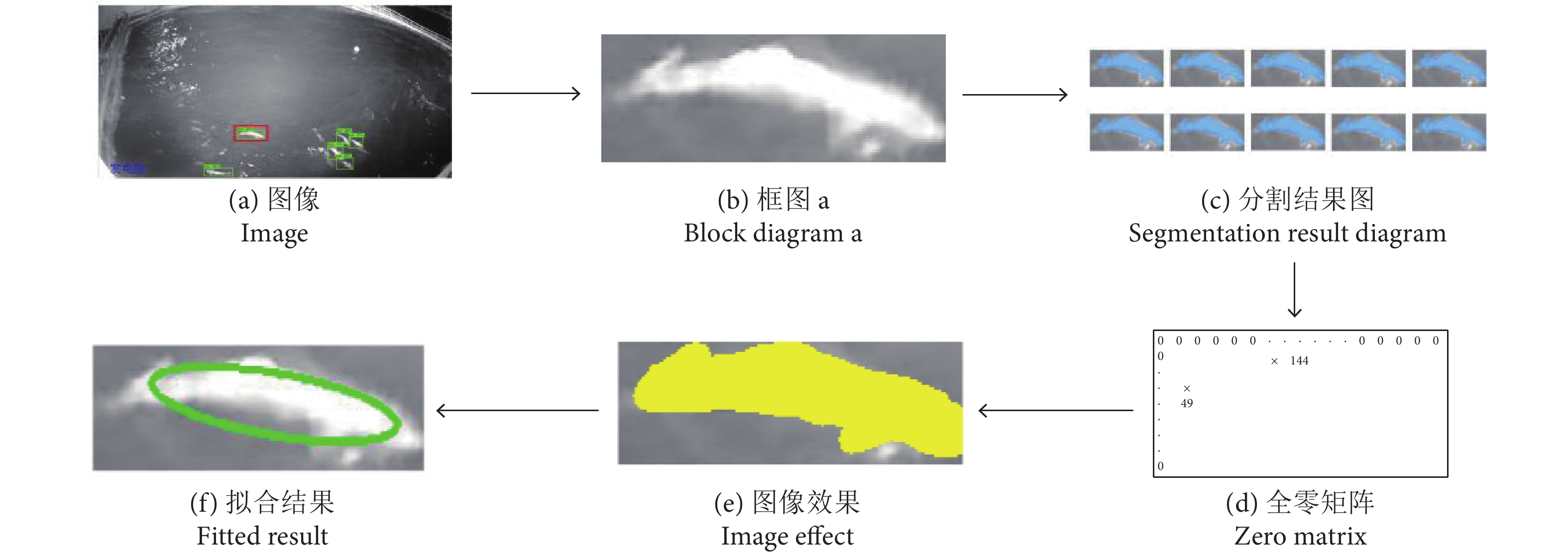

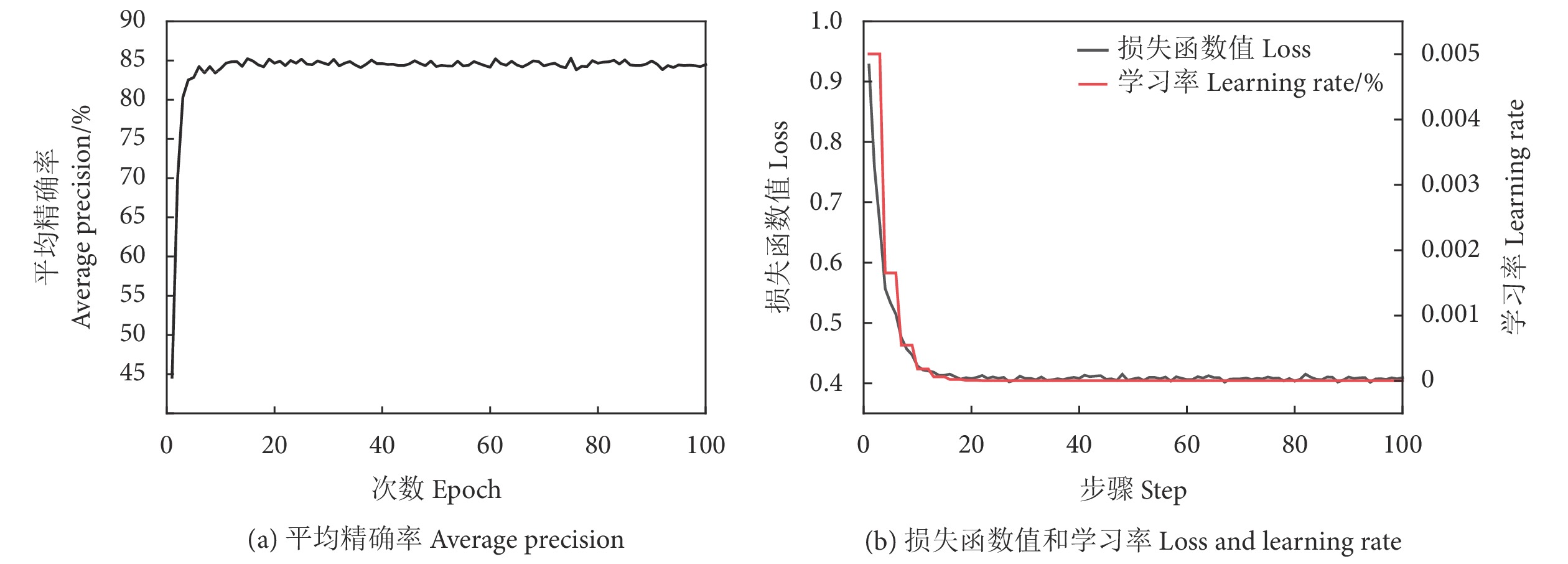

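Acquiring data on fish school activity during deep-sea cage culture and monitoring the school is an effective way to raise the efficiency and lower the cost of deep-sea aquaculture. Using a water-surface infrared camera and recent deep learning techniques, an intelligent fish school monitoring method is proposed. The method comprises three functional modules: fish detection and counting, fish body segmentation, and swimming-direction determination. First, fish images are captured with the infrared camera and annotated to build a dataset; an improved Faster RCNN model, with a Mobilenetv2+FPN network as the feature extractor, then detects the fish accurately and outputs bounding boxes that mark the position of each individual. Second, the brightest 20% of pixels inside each bounding box are selected as segmentation prompt points, and the Segment Anything Model segments the image to produce fish body segmentation maps. Finally, an ellipse is fitted to each fish body segmentation map to determine the swimming direction. After 100 training epochs, the improved Faster RCNN model reached an average precision of 84.5%, with a detection time of 0.042 s per image. The results show that, on the water-surface infrared fish image dataset, the proposed improved Faster RCNN model, ellipse fitting, and the other key techniques can monitor fish schools automatically.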
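The prompt-point step (taking the brightest 20% of pixels inside a detection box) can be illustrated with a small sketch. This is only one possible reading of that step, not the authors' code: the function name `select_prompt_points`, the list-of-lists image layout, and the exclusive upper box bounds are all assumptions made for the example, and how the resulting points are handed to the Segment Anything Model is not specified in the abstract.

```python
def select_prompt_points(gray, box, top_fraction=0.2):
    """Return (x, y) coordinates of the brightest pixels inside a bounding box.

    gray: 2-D list of pixel intensities, indexed as gray[y][x].
    box:  (x_min, y_min, x_max, y_max); the upper bounds are treated as
          exclusive, which is an assumption of this sketch.
    top_fraction: share of box pixels to keep (0.2 mirrors the "top 20%").
    """
    x_min, y_min, x_max, y_max = box
    # Collect (intensity, x, y) for every pixel inside the box.
    pixels = [
        (gray[y][x], x, y)
        for y in range(y_min, y_max)
        for x in range(x_min, x_max)
    ]
    if not pixels:
        return []
    # Brightest first; always keep at least one point.
    pixels.sort(key=lambda p: p[0], reverse=True)
    keep = max(1, int(len(pixels) * top_fraction))
    return [(x, y) for _, x, y in pixels[:keep]]


# Tiny 2 x 5 "infrared" patch: a 10-pixel box yields 2 prompt points.
patch = [
    [10, 20, 30, 40, 50],
    [60, 70, 80, 90, 100],
]
points = select_prompt_points(patch, (0, 0, 5, 2))
```

In an infrared frame the fish body is typically brighter than the surrounding water, which is presumably why brightness is a usable cue for where to place foreground prompts.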

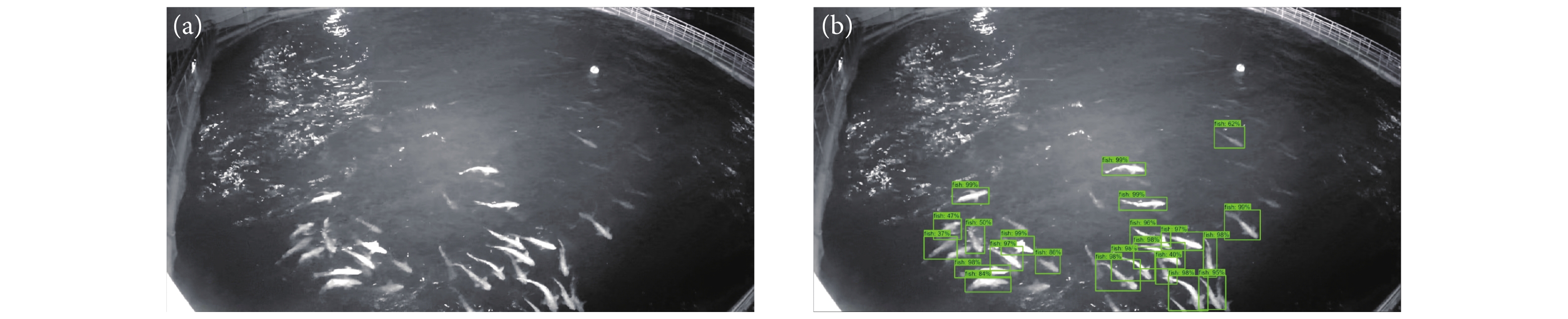

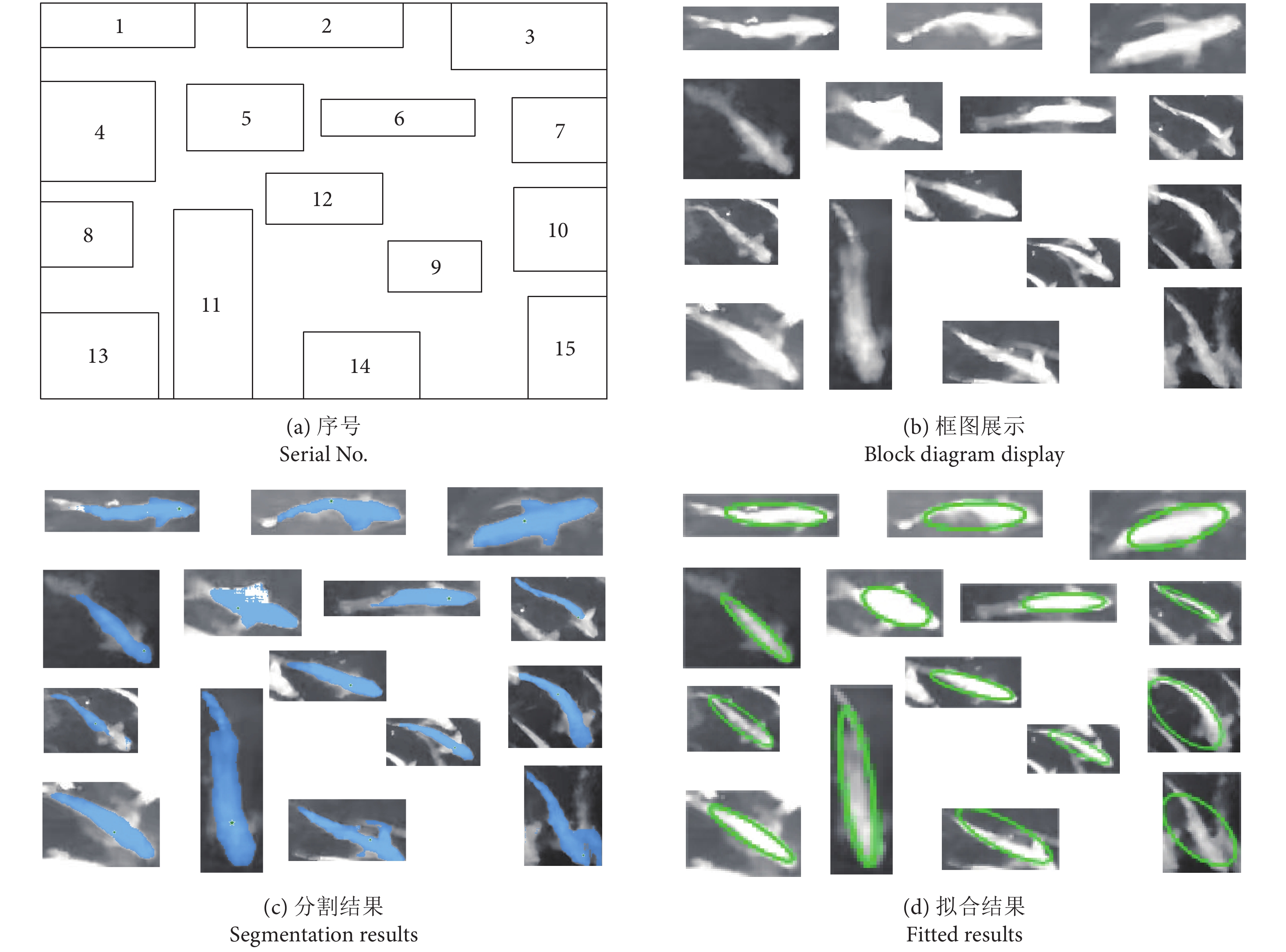

Abstract: Monitoring fish schools to obtain information on their size and behavior is an important way to improve the efficiency and reduce the cost of deep-sea aquaculture. This study proposes an intelligent fish school monitoring method that collects data with infrared cameras mounted on a net cage and trains models with recent deep learning techniques. The method comprises three functional modules: fish detection, fish segmentation, and fish pose determination. First, fish images were collected by the infrared cameras and manually annotated to build datasets, and an improved Faster RCNN model, which uses Mobilenetv2 with an FPN network as the feature extractor to improve detection accuracy, was adopted to output a bounding box for each individual fish. Second, the brightest 20% of pixels within each bounding box were selected as segmentation prompt points, and the image was segmented with the Segment Anything Model to generate fish segmentation results. Finally, the fish pose was determined by fitting an ellipse to each segmentation result. After 100 epochs of training, the average precision (AP) of the improved Faster RCNN model reached 84.5%, and the detection time per image was 0.042 s. The results indicate that the proposed method can monitor fish schools automatically in infrared images and extract useful information.
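To make the ellipse-fitting step concrete, the sketch below estimates the major-axis angle of a binary fish mask from its second-order central moments. This is the moment-equivalent ellipse, a stand-in chosen for the example; the abstract does not say which fitting routine the authors used. The function name `ellipse_orientation` and the list-of-lists mask layout are assumptions.

```python
import math


def ellipse_orientation(mask):
    """Estimate the major-axis angle of a binary mask, in degrees in [0, 180).

    mask: 2-D list of 0/1 values, indexed as mask[y][x].
    The angle is measured from the x axis in image coordinates
    (x to the right, y downward).
    """
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if len(points) < 2:
        raise ValueError("need at least two foreground pixels")
    n = len(points)
    # Centroid of the foreground pixels.
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    # Second-order central moments.
    mu20 = sum((x - cx) ** 2 for x, _ in points) / n
    mu02 = sum((y - cy) ** 2 for _, y in points) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in points) / n
    # Orientation of the principal axis of the equivalent ellipse.
    theta = 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)
    return math.degrees(theta) % 180.0


horizontal = ellipse_orientation([[1, 1, 1, 1]])
vertical = ellipse_orientation([[1], [1], [1], [1]])
diagonal = ellipse_orientation([[1, 0, 0], [0, 1, 0], [0, 0, 1]])
```

An ellipse axis is symmetric, so the angle is defined only modulo 180 degrees; telling head from tail would need an extra cue that this sketch does not attempt.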


Keywords:

- Deep-sea cage
- Fish school monitoring
- Infrared image
- Object detection
- Instance segmentation


Table 1 Experimental platform environment

| Name | Version and specifications |
| --- | --- |
| Operating system | Windows 11 |
| GPU | NVIDIA GeForce RTX 3060 Ti |
| CPU | Intel Core i5-12400F (six-core) |
| Memory | 32 GB |
| Programming language | Python 3.8 |
| Framework for network development | PyTorch 1.10 |
| CUDA (Compute Unified Device Architecture) | 11.3 |
| cuDNN (NVIDIA CUDA Deep Neural Network library) | 8.2 |

Table 2 Comparison of different feature extraction networks

| Feature extraction network | Average precision (AP)/% | Average recall (AR)/% | Detection time per image/s |
| --- | --- | --- | --- |
| Mobilenetv2 | 70.0 | 44.5 | 0.039 |
| Mobilenetv2+FPN | 84.5 | 60.8 | 0.042 |
| VGG16 | 84.9 | 61.0 | 0.074 |
| VGG16+FPN | 86.0 | 64.4 | 0.077 |
| Resnet50 | 83.1 | 59.5 | 0.044 |
| Resnet50+FPN | 83.5 | 60.0 | 0.062 |
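The per-image detection times in Table 2 convert directly into throughput, which makes the accuracy and speed trade-off between backbones easier to read. The sketch below copies the Table 2 values and computes images per second, assuming images are processed one at a time with no batching; the names `TABLE2`, `frames_per_second`, and `summarize` are hypothetical.

```python
# Values copied from Table 2: (AP in %, AR in %, detection time per image in s).
TABLE2 = {
    "Mobilenetv2": (70.0, 44.5, 0.039),
    "Mobilenetv2+FPN": (84.5, 60.8, 0.042),
    "VGG16": (84.9, 61.0, 0.074),
    "VGG16+FPN": (86.0, 64.4, 0.077),
    "Resnet50": (83.1, 59.5, 0.044),
    "Resnet50+FPN": (83.5, 60.0, 0.062),
}


def frames_per_second(seconds_per_image):
    """Throughput for sequential, one-image-at-a-time inference."""
    return 1.0 / seconds_per_image


def summarize(table):
    """Return (name, AP, AR, images per second) rows, fastest first."""
    rows = [
        (name, ap, ar, round(frames_per_second(t), 1))
        for name, (ap, ar, t) in table.items()
    ]
    return sorted(rows, key=lambda r: r[3], reverse=True)


summary = summarize(TABLE2)
for name, ap, ar, fps in summary:
    print(f"{name:<16} AP={ap:5.1f}%  AR={ar:5.1f}%  {fps:5.1f} img/s")
```

Read this way, Mobilenetv2+FPN gives up 1.5 AP points relative to VGG16+FPN (84.5% versus 86.0%) while taking 0.042 s instead of 0.077 s per image.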

